On Disk, The Devil’s In The Details

Introduction

During red team operations and penetration tests, there are occasions where you need to drop an executable to disk. It’s usually best to stay in memory and avoid this if possible, but there are plenty of situations where it’s unavoidable, like DLL sideloading. In these cases, you typically drop a custom malicious PE file of some sort. Being on disk instead of in memory opens you up to the world of AV static analysis and the challenges that bypassing it presents. There are many resources on the net about avoiding AV signatures (for Metasploit shellcode, say) by using string obfuscation, encryption, XORs, pulling down staged payloads over the network, shrinking the import table, polymorphic encoding, etc. I’m going to assume you’ve done your due diligence and handled the big stuff. However, there are some other, more subtle indicators and heuristics AV can use to help spot a malicious binary when it is present on disk. These are what this post is all about.

Data About Data

When you compile a binary, whether it’s a DLL or an EXE, the compiler will automatically include a certain amount of metadata about the resulting binary, such as the compilation date and time, compiler vendor, debug files, file paths, etc. This “data about data” can reveal a lot about an executable, especially an executable never encountered by a given AV engine.

The AV engine’s job is to take files, inspect their metadata, apply heuristics, and determine the likelihood that each one is malicious. Clearly, the more metadata and information we leave in our dropped binary, the more likely it is to be flagged. We are automatically at a disadvantage, since we are writing custom code that has never been seen by the AV engine and whose file hash is unfamiliar. Compare that to a very commonly seen file, like a Firefox installer MSI with a known hash and metadata, seen by many installations of the AV software across customer locations, and you can see how a custom compiled binary can stick out.

All is not lost, however. AV can’t simply declare every newly seen file malicious, as all known-good files start off as unknown at some point. So the AV must use imperfect signatures, metadata, and heuristics to make a good vs. bad determination. We want to remove as many pieces of information that could push us towards a positive detection as we can.

Now, will making these changes make your malicious payload FUD and guaranteed to slip through? Not at all. If you’re dropping unencrypted Cobalt Strike shellcode all over the place, you’re done. But the better AV and EDR get, the more important it is to give them as little information as possible. And who wants to burn a perfectly crafted custom payload because you left some silly string in? It’s not a magic bullet, it’s not even an ordinary unmagical bullet, but every little bit helps.

Code Signing

One way developers can help signal to Windows and AV engines that their software is not malicious is by using code signing certs. These are (supposed to be) expensive and difficult to obtain X.509 certificates that can be used to cryptographically sign a compiled binary. The idea is that only the legitimate and properly vetted owner should have access to the private key, so a signed file must have been signed by that owner, indicating that it is trustworthy. This gives AV a high fidelity way of identifying the author.

There are two problems with this approach though. The first is that certificates and keys get stolen or leaked. Stuxnet famously used multiple stolen, valid code signing certs to sign its payloads and help avoid detection. Certificate private keys occasionally end up committed to GitHub as well. So a valid signature is never a 100% guarantee of non-maliciousness.

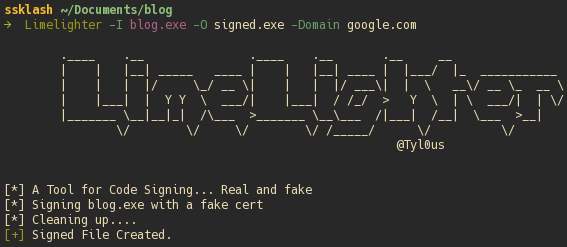

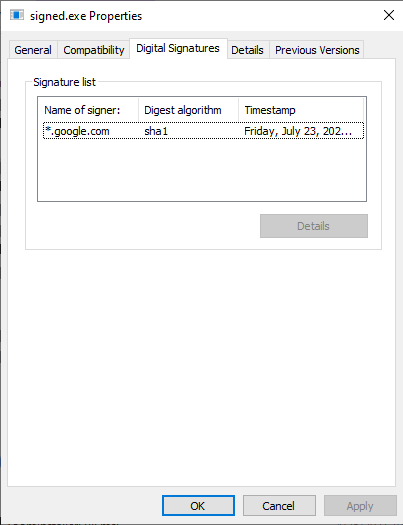

The other issue is that sometimes AV engines fail to check the validity of a certificate at all, instead simply checking to see if the file has been signed. This means that as long as we can sign our payload with any old self-signed cert, we would pass this particular check. Luckily for us, anyone can generate a code signing cert and use it to sign their malware. It’s free and easy to automate. This Stack Overflow post shows how to create one on Windows and how to use signtool to sign a binary. On Linux, you can use Limelighter to sign with an existing certificate, or download the cert from a website and use it as a code signing cert:

And the resulting self-signed binary:

CarbonCopy is another good tool that can use website certificates to sign a file.
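
To make the weakness concrete, here is roughly what an "is it signed at all?" check amounts to. This is a minimal Python sketch of my own, not how any particular AV product works: it only looks at whether the PE's certificate table entry (data directory index 4) is populated, and never validates the certificate chain. The function name `has_certificate_table` is hypothetical.

```python
import struct

SECURITY_DIRECTORY = 4  # index of the certificate table in the data directories


def has_certificate_table(pe_bytes: bytes) -> bool:
    """Return True if the PE has a non-empty certificate table entry.

    This says nothing about whether the signature is valid or trusted.
    """
    if pe_bytes[:2] != b"MZ":
        raise ValueError("not an MZ executable")
    # e_lfanew (offset 0x3C) points at the "PE\0\0" signature.
    (e_lfanew,) = struct.unpack_from("<I", pe_bytes, 0x3C)
    if pe_bytes[e_lfanew:e_lfanew + 4] != b"PE\0\0":
        raise ValueError("PE signature not found")
    # The optional header follows the 4-byte signature and 20-byte COFF header.
    opt = e_lfanew + 24
    (magic,) = struct.unpack_from("<H", pe_bytes, opt)
    if magic == 0x10B:      # PE32
        dirs = opt + 96
    elif magic == 0x20B:    # PE32+
        dirs = opt + 112
    else:
        raise ValueError("unknown optional header magic")
    # NumberOfRvaAndSizes sits immediately before the data directories.
    (count,) = struct.unpack_from("<I", pe_bytes, dirs - 4)
    if count <= SECURITY_DIRECTORY:
        return False
    offset, size = struct.unpack_from("<II", pe_bytes, dirs + 8 * SECURITY_DIRECTORY)
    return offset != 0 and size != 0
```

A self-signed binary and a properly signed one both return `True` here, which is exactly why a presence-only check is such a weak signal.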

File Properties

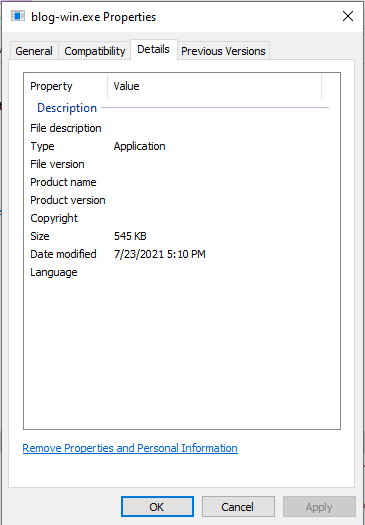

Another piece of data, or rather lack of data, is the file properties of an executable. By default, this information is not included when you compile a binary. It looks like this:

It must be added via a resource file and compiled into the binary. This missing information is another, somewhat low fidelity, indicator that a file may not have been produced by a legitimate software vendor, and is therefore more likely to be malicious. Admittedly, this is probably not a huge red flag to most AV, but it’s easy enough to implement, so why not? The details add up.

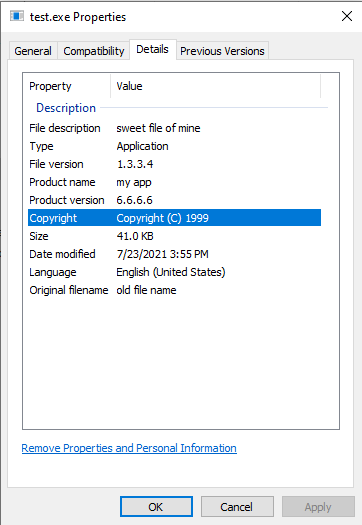

Creating the resource file is not the most straightforward process. I found the easiest way was to let Visual Studio create it for me. You create a new item of the type resource, and then add a Version resource. Tweak it how you’d like, and then save the resulting Resource.rc file. I’ve created one and stripped out the extraneous lines for easy use here.

Here are two gists for creating the object file to include with your compilation sources: Windows and Linux. Thanks to Sektor7 for the Windows version.
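
If you want a quick sanity check that the version resource actually made it into your binary, something like the following works. It is a rough Python sketch of my own and deliberately crude: rather than walking the resource directory, it just searches the file for the UTF-16 strings a version resource contains. The function name and the list of keys are my choices, and a byte search like this can be fooled, so treat it as a smoke test only.

```python
# Standard string names commonly found in a version resource.
VERSION_KEYS = (
    "CompanyName",
    "FileDescription",
    "FileVersion",
    "ProductName",
    "OriginalFilename",
)


def version_info_summary(pe_bytes: bytes):
    """Return the version string names found in the file, or None if the
    file does not appear to contain a version resource at all."""

    def present(text: str) -> bool:
        # Version resources store their keys as UTF-16LE strings.
        return text.encode("utf-16-le") in pe_bytes

    if not present("VS_VERSION_INFO"):
        return None
    return [key for key in VERSION_KEYS if present(key)]
```

Running it against a file's bytes (for example `version_info_summary(open(path, "rb").read())`, with `path` being your compiled binary) returns `None` when there is no version resource, and a list of the populated names otherwise.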

Here is the result of including a resource file during compilation:

The Rich Header

The Rich header is an undocumented structure found in Windows executables that were built with Microsoft’s toolchain. It sits between the DOS stub and the PE header, and captures some information about the compilation process, including the compiler and linker versions, number of files compiled, objects created, etc. It has been covered in some depth in several places, but a good recap and analysis is here.

Because this header encodes rather specific information about an executable, it provides a way of tracking it between systems. AV engines can use it to match up strains of malware, aid attribution, etc. Some threat actors are aware of this fact, however, and try to use it to their advantage. The most well-known case of this was the OlympicDestroyer malware, which spoofed its Rich header to resemble that of malware attributed to the Lazarus group.

I don’t have code or specific recommendations here, mainly because what you might want to do with the Rich header will depend on what you want to achieve. It is worth knowing about, because it is an indicator that you can use, or have used against you. For instance, the GCC compiler doesn’t include the Rich header. If the environment you’re operating in is dominated by Windows machines, much of the software running was likely compiled by Visual Studio. Running a GCC or MinGW compiled binary isn’t enough on its own to get you caught, but it may make you stand out, which can often mean the same thing. So you may want to add a Rich header, or remove it, or change it to emulate an adversary, or do nothing at all with it. Just know that it exists, and be aware of what it might tell the opposition about your file. Knowledge is power after all.

If you would like to at least remove the Rich header, peupdate can handle that for you. Another option would be one of the PE parsing Python libraries.

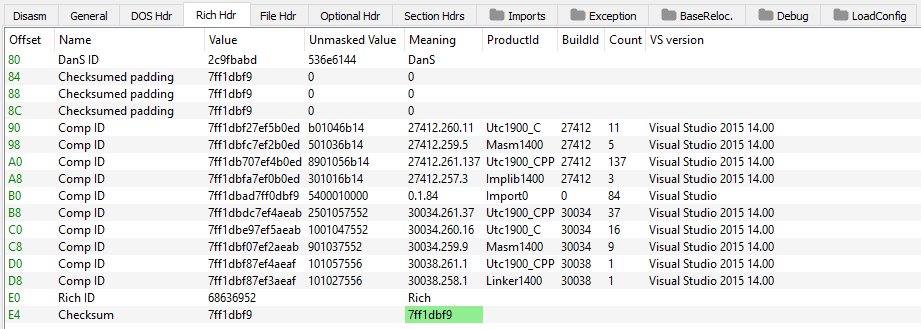

Here is a breakdown of the Rich header, courtesy of the wonderful PE-bear. Note the references to masm and the Visual Studio version used.
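
If you’d rather see what your own binary’s Rich header says without a GUI, it is simple enough to decode by hand. Below is a minimal Python sketch based on the commonly described layout: a `DanS` marker and three padding dwords, followed by pairs of component ID and use count, all XORed with the key stored right after the `Rich` marker. The function name `parse_rich_header` is mine, and it does no checksum validation, so treat it as a quick look rather than a robust parser.

```python
import struct


def parse_rich_header(pe_bytes: bytes):
    """Return a list of (product_id, build, count) tuples decoded from the
    Rich header, or None if no Rich header is found."""
    (e_lfanew,) = struct.unpack_from("<I", pe_bytes, 0x3C)
    # The Rich header lives between the DOS header and the PE signature.
    rich_end = pe_bytes.find(b"Rich", 0x40, e_lfanew)
    if rich_end == -1:
        return None
    # The XOR key is the dword immediately after the "Rich" marker.
    (key,) = struct.unpack_from("<I", pe_bytes, rich_end + 4)
    dans = struct.unpack("<I", b"DanS")[0] ^ key

    # Walk backwards dword by dword to find the XORed "DanS" start marker.
    start = None
    for off in range(rich_end - 4, 0x3F, -4):
        if struct.unpack_from("<I", pe_bytes, off)[0] == dans:
            start = off
            break
    if start is None:
        return None

    entries = []
    # Skip "DanS" plus three padding dwords, then read (comp_id, count) pairs.
    for off in range(start + 16, rich_end, 8):
        comp_id, count = struct.unpack_from("<II", pe_bytes, off)
        comp_id ^= key
        count ^= key
        # High 16 bits: product ID; low 16 bits: build number.
        entries.append((comp_id >> 16, comp_id & 0xFFFF, count))
    return entries
```

A GCC or MinGW build should come back as `None`, while a Visual Studio build returns one tuple per tool and version that contributed objects to the link.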

Compile Times

Another indicator AV can use to help determine maliciousness in a file is the compilation time. The idea is that most software will have been compiled some time in the past before it is used. A very recently compiled binary, say within the past day or even hour, could look very suspicious, especially running on Bob in HR’s machine, who probably isn’t doing any programming. Even a signed binary with no other obvious signs of being malicious, depending on the compile time, can look mighty strange. As always, context matters. If by chance you’ve breached the development network, new binaries are business as usual.

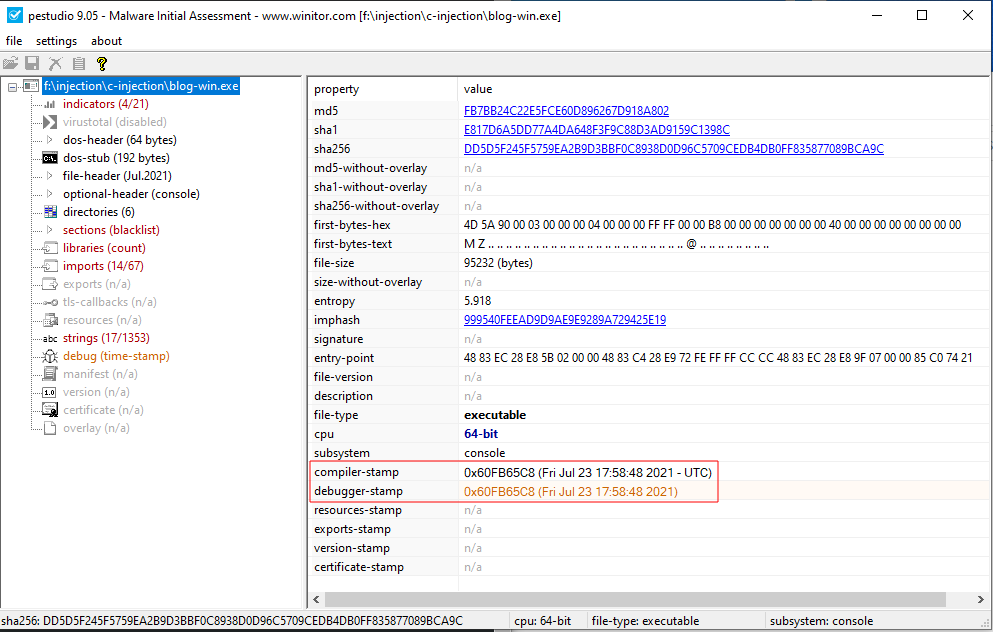

One complication with timestamps in a PE file is the sheer number of them. This post puts the number at 8, though some are not always included, or are simply for managing bound imports and are not full timestamps. A tool is included for viewing them, and tools like PEStudio are great for this as well. Two commonly modified timestamps are the TimeDateStamp of the COFF File Header, and the TimeDateStamp field of the debug directory:

Like the Rich header, timestamps are not something that must be changed. They are just another piece of information to be aware of, something that can tell the blue team a story. You get to decide what story is appropriate, depending on the context of the engagement.
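
To see the story your own binary is telling, you can read the COFF File Header timestamp directly. Here is a small Python sketch, assuming a well-formed PE file; the function name `coff_timestamp` is mine. It covers only the one TimeDateStamp field in the COFF header, not the debug directory or any of the other timestamps.

```python
import struct
from datetime import datetime, timezone


def coff_timestamp(pe_bytes: bytes) -> datetime:
    """Return the COFF File Header TimeDateStamp as a UTC datetime."""
    if pe_bytes[:2] != b"MZ":
        raise ValueError("not an MZ executable")
    # e_lfanew (offset 0x3C) points at the "PE\0\0" signature.
    (e_lfanew,) = struct.unpack_from("<I", pe_bytes, 0x3C)
    if pe_bytes[e_lfanew:e_lfanew + 4] != b"PE\0\0":
        raise ValueError("PE signature not found")
    # COFF header after the signature: Machine (2 bytes),
    # NumberOfSections (2 bytes), then TimeDateStamp (4 bytes).
    (stamp,) = struct.unpack_from("<I", pe_bytes, e_lfanew + 8)
    # The field is seconds since the Unix epoch.
    return datetime.fromtimestamp(stamp, tz=timezone.utc)
```

Comparing the result against the current time gives a rough idea of how "fresh" the file will look to anyone who checks.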

For an excellent deep dive into timestamps, I recommend this blog post.

Conclusion

The main theme of this post has been about knowing the little details of the malware you write, and the context in which you deploy that malware. Context matters, details add up, and they can make or break an engagement. I hope this list of subtleties will come in handy on your next engagement.